Machine learning force field: Theory

Latest revision as of 08:47, 24 December 2024

Here we present the theory for on-the-fly machine learning force fields. The theory will be presented in a very condensed manner and for a more detailed description of the methods, we refer the readers to Refs. [1], [2] and [3].

Introduction

Molecular dynamics is one of the most important methods for determining dynamic properties. The quality of a molecular dynamics simulation depends strongly on the accuracy of the underlying computational method, but higher accuracy usually comes at the cost of increased computational demand. A very accurate method is ab initio molecular dynamics, where the interactions of atoms and electrons are calculated fully quantum mechanically from ab initio calculations (e.g., DFT). Unfortunately, this method is often limited to small simulation cells and short simulation times. One way to greatly speed up these calculations is to use force fields, which are parametrizations of the potential energy. These parametrizations range from very simple functions with only empirical parameters to very complex functions parametrized using thousands of ab initio calculations. Constructing accurate force fields manually is usually a very time-consuming task that requires considerable expertise.

Another way to greatly reduce computational cost and required human intervention is machine learning. Here, in the prediction of the target property, the method automatically interpolates between known training systems that were previously calculated ab initio. This already simplifies the generation of force fields significantly compared to a classical force field, which needs manual (or even empirical) adjustment of the parameters. Nevertheless, there is still the problem of how to choose the proper (minimal) training data. One very efficient and automatic way to solve this is to adopt on-the-fly learning, in which an MD calculation is used for the learning. During the MD run, ab initio data is picked out and added to the training data, and a force field is continuously built up from the existing data. At each step, it is judged whether to perform an ab initio calculation and possibly add the data to the force field, or to use the force field for that step and skip learning. Hence the name "on the fly" learning. The crucial ingredient is the probability model for the estimation of errors, which is built up from newly sampled data. The more accurate the force field gets, the less sampling is needed and the more of the expensive ab initio steps are skipped. In this way, not only can the convergence of the force field be controlled, but a very widespread scan through phase space for the training structures can also be performed. The key requirement for on-the-fly machine learning, explained together with the rest of the methodology in the following subsections, is the ability to predict errors of the force field on a newly sampled structure without having to perform an ab initio calculation on that structure.

Algorithms

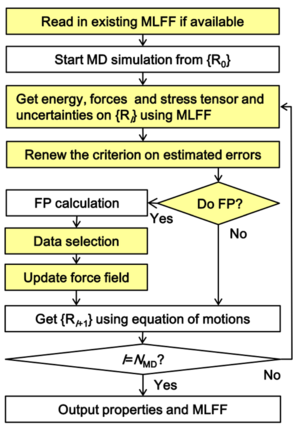

On-the-fly machine-learning algorithm

To obtain the machine-learning force field several structure datasets are required. A structure dataset defines the Bravais lattice and the atomic positions of the system and contains the total energy, the forces, and the stress tensor calculated by first principles. Given these structure datasets, the machine identifies local configurations around an atom to learn what force field is appropriate. The local configuration measures the radial and angular distribution of neighboring atoms around this given site and is captured in the so-called descriptors.

The on-the-fly force field generation scheme is given by the following steps (a flowchart of the algorithm is shown in Fig. 1):

1. The machine predicts the energy, the forces, and the stress tensor and their uncertainties for a given structure using the existing force field.

2. The machine decides whether to perform a first-principles calculation (proceed with step 3); otherwise we skip to step 5.

3. Performing the first-principles calculation, we obtain a new structure dataset that may improve the force field.

4. If the number of newly collected structures reaches a certain threshold or if the predicted uncertainty becomes too large, the machine learns an improved force field by including the new structure datasets and local configurations.

5. Atomic positions and velocities are updated by using the force field (if accurate enough) or the first-principles calculation.

6. If the desired total number of ionic steps (NSW) is reached we are done; otherwise proceed with step 1.
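The control flow of the steps above can be sketched as a short driver loop. This is only an illustration: the function `on_the_fly_md` and every callable passed to it (`predict`, `run_ab_initio`, `retrain`, `propagate`), as well as the parameter names `threshold`, `strict_threshold` and `block_size`, are hypothetical placeholders and not part of any real interface.

```python
def on_the_fly_md(n_steps, state, predict, run_ab_initio, retrain, propagate,
                  threshold, strict_threshold, block_size):
    """Hypothetical driver for the six steps; every callable is a placeholder."""
    pending = []      # structure datasets collected since the last retraining
    n_ab_initio = 0   # number of first-principles calculations performed
    for _ in range(n_steps):                        # step 6: stop after n_steps
        forces, uncertainty = predict(state)        # step 1: force-field prediction
        if uncertainty > threshold:                 # step 2: is first principles needed?
            forces, dataset = run_ab_initio(state)  # step 3: new structure dataset
            pending.append(dataset)
            n_ab_initio += 1
            # step 4: retrain when the block is full or the uncertainty is too large
            if len(pending) >= block_size or uncertainty > strict_threshold:
                retrain(pending)
                pending = []
        state = propagate(state, forces)            # step 5: update positions/velocities
    return state, n_ab_initio
```

As the force field improves, `predict` reports smaller uncertainties and fewer iterations enter the first-principles branch, which is where the speed-up comes from.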

Sampling of training data and local reference configurations

We employ a learning scheme where structures are only added to the list of training structures when local reference configurations are picked for atoms that have an error in the force higher than a given threshold. So in the following, it is implied that whenever a new training structure is obtained, also local reference configurations from this structure are obtained.

The force field does not need to be retrained immediately every time a training structure with its local configurations is added. Instead, one can collect candidates and learn from all of them simultaneously at a later step (blocking), which saves significant computational cost. Learning after every new configuration and learning after every block can give different results, but for block sizes that are not too large the difference should be small. The tag ML_MCONF_NEW sets the block size for learning. The force field is therefore usually not updated at every molecular-dynamics step. The update happens under the following conditions:

- If there is no force field present, all atoms of a structure are sampled as local reference configurations and a force field is constructed.

- If the Bayesian error of the force for any atom is above the strict threshold set by ML_CDOUB × ML_CTIFOR, the local reference configurations are sampled and a new force field is constructed.

- If the Bayesian error of the force for any atom is above the threshold ML_CTIFOR but below ML_CDOUB × ML_CTIFOR, the structure is added to the list of new training structure candidates. Whenever the number of candidates is equal to ML_MCONF_NEW they are added to the entire set of training structures and the force field is updated. To avoid sampling structures that are too similar, ML_NMDINT sets the minimum number of steps before the next structure may be taken as a candidate. All ab initio calculations within this interval are skipped if the Bayesian error for the force on all atoms is below ML_CDOUB × ML_CTIFOR.
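The three conditions above amount to a small decision rule. The sketch below is an illustration only: the function names, return labels and argument names are hypothetical, the arguments `ctifor`, `cdoub` and `mconf_new` merely stand in for the values of ML_CTIFOR, ML_CDOUB and ML_MCONF_NEW, and the ML_NMDINT spacing between candidates is deliberately left out.

```python
def classify_structure(max_force_error, ctifor, cdoub, have_force_field=True):
    """Return a label for the current MD step (labels are hypothetical)."""
    if not have_force_field:
        return "train_now"      # no force field yet: sample all atoms and train
    if max_force_error > cdoub * ctifor:
        return "train_now"      # above the strict threshold: retrain immediately
    if max_force_error > ctifor:
        return "candidate"      # between the thresholds: store for block learning
    return "skip"               # accurate enough: use the force field


def collect_candidate(candidates, structure, mconf_new):
    """Append a candidate; return True once the block is full and training is due."""
    candidates.append(structure)
    return len(candidates) >= mconf_new
```

For example, with `ctifor=0.02` and `cdoub=2.0`, a maximum force error of 0.03 yields `"candidate"`, while 0.05 exceeds the strict threshold of 0.04 and yields `"train_now"`.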

Threshold for error of forces

Training structures and their corresponding local configurations are only chosen if the error in the forces of any atom exceeds a chosen threshold. The initial threshold is set to the value provided by ML_CTIFOR (the unit is eV/Angstrom). The behavior of how the threshold is further controlled is given by ML_ICRITERIA. The following options are available:

- ML_ICRITERIA = 0: No update of initial value of ML_CTIFOR is done.

- ML_ICRITERIA = 1: Update of criteria using an average of the Bayesian errors of the forces from history (see description of the method below).

- ML_ICRITERIA = 2: Update of criteria using gliding average of Bayesian errors (probably more robust but not well tested).

Generally, it is recommended to automatically update the threshold ML_CTIFOR during machine learning. Details on how and when the update is performed are controlled by ML_CSLOPE, ML_CSIG and ML_MHIS.

Description of ML_ICRITERIA=1:

ML_CTIFOR is updated using the average Bayesian error in the previous steps. Specifically, it is set to

ML_CTIFOR = (average of the stored Bayesian errors) × (1.0 + ML_CX).

The number of entries in the history of the Bayesian errors is controlled by ML_MHIS. To prevent noisy data or an abrupt jump of the Bayesian error from causing issues, the standard error of the history must be below the threshold ML_CSIG for the update to take place. Furthermore, the slope of the stored data must be below the threshold ML_CSLOPE. In practice, the slope and the standard error are at least to some extent correlated: often the standard error is proportional to ML_MHIS/3 times the slope or somewhat larger. We recommend varying only ML_CSIG and keeping ML_CSLOPE fixed at its default value.
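A minimal sketch of this update rule follows. The text does not specify how the standard error and the slope are normalized, so the code assumes (purely for illustration) that both are taken relative to the mean of the history, that the spread is the sample standard deviation, and that the slope comes from a linear least-squares fit against the step index. The function name `update_ctifor` and its arguments are hypothetical; `cx`, `csig` and `cslope` stand in for ML_CX, ML_CSIG and ML_CSLOPE.

```python
import numpy as np


def update_ctifor(history, ctifor, cx, csig, cslope):
    """Return the new threshold, or the old one if the history is too noisy.

    Assumption: spread and slope are normalized by the mean of the history.
    """
    errors = np.asarray(history, dtype=float)
    if errors.size < 2:
        return ctifor                      # not enough data to judge the history
    mean = errors.mean()
    rel_spread = errors.std(ddof=1) / mean
    slope = np.polyfit(np.arange(errors.size), errors, 1)[0]
    rel_slope = abs(slope) / mean
    if rel_spread < csig and rel_slope < cslope:
        return mean * (1.0 + cx)           # average of stored errors times (1 + ML_CX)
    return ctifor                          # keep the previous threshold
```

A smooth history such as `[0.010, 0.011, 0.009, 0.010]` passes both checks and moves the threshold to the scaled average, whereas an oscillating history leaves it unchanged.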

Local energies

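The potential energy <math>U</math> of a structure with <math>N_{a}</math> atoms is approximated as

<math>
U = \sum\limits_{i=1}^{N_{a}} U_{i}.
</math>

The local energies <math>U_{i}</math> are functionals <math>U_{i}=F[\rho_{i}(\mathbf{r})]</math> of the probability density <math>\rho_{i}</math> to find another atom <math>j</math> at the position <math>\mathbf{r}</math> around the atom <math>i</math> within a cut-off radius <math>R_{\mathrm{cut}}</math> defined as

<math>
\rho_{i}\left(\mathbf{r}\right) = \sum\limits_{j \ne i}^{N_{a}} f_{\mathrm{cut}}\left(r_{ij}\right) g\left(\mathbf{r}-\mathbf{r}_{ij}\right), \quad r_{ij}=|\mathbf{r}_{ij}|=|\mathbf{r}_{j}-\mathbf{r}_{i}|.
</math>

Here <math>f_{\mathrm{cut}}</math> is a cut-off function that goes to zero for <math>r_{ij}>R_{\mathrm{cut}}</math> and <math>g\left(\mathbf{r}\right)</math> is a delta function.

The atom distribution can also be written as a sum of individual distributions:

<math>
\rho_{i}\left(\mathbf{r}\right) = \sum\limits_{j \ne i}^{N_{a}} \rho_{ij}\left(\mathbf{r}\right), \quad \rho_{ij}\left(\mathbf{r}\right) = f_{\mathrm{cut}}\left(r_{ij}\right) g\left(\mathbf{r}-\mathbf{r}_{ij}\right).
</math>

Here <math>\rho_{ij}\left(\mathbf{r}\right)</math> describes the probability of finding an atom <math>j</math> at a position <math>\mathbf{r}</math> with respect to atom <math>i</math>.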

Descriptors

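Similar to the Smooth Overlap of Atomic Positions[4] (SOAP) method, the delta function <math>g\left(\mathbf{r}\right)</math> is approximated as

<math>
g\left(\mathbf{r}\right)=\frac{1}{\sigma_{\mathrm{atom}}\sqrt{2\pi}}\mathrm{exp}\left(-\frac{|\mathbf{r}|^{2}}{2\sigma_{\mathrm{atom}}^{2}}\right).
</math>

Unfortunately <math>\rho_{i}\left(\mathbf{r}\right)</math> is not rotationally invariant. To deal with this problem, intermediate functions or descriptors that depend on <math>\rho_{i}\left(\mathbf{r}\right)</math> and possess rotational invariance are introduced: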

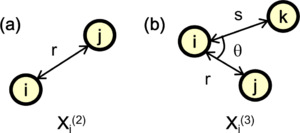

Radial descriptor

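This is the simplest descriptor, which relies on the radial distribution function

<math>
\rho_{i}^{(2)}\left(r\right) = \frac{1}{4\pi} \int \rho_{i}\left(r\hat{\mathbf{r}}\right) d\hat{\mathbf{r}},
</math>

where <math>\hat{\mathbf{r}}</math> denotes the unit vector of the vector <math>\mathbf{r}_{ij}</math> between atoms <math>i</math> and <math>j</math> [see Fig. 2 (a)]. The radial descriptor can also be regarded as a two-body descriptor.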

Angular descriptor

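In most cases the radial descriptor is not enough to distinguish different probability densities <math>\rho_{i}</math>, since two different <math>\rho_{i}</math> can yield the same <math>\rho_{i}^{(2)}</math>. To improve on this, angular information between two radial descriptors is also incorporated within an angular descriptor

<math>
\rho_{i}^{(3)}\left(r,s,\theta\right) = \iint d\hat{\mathbf{r}}\, d\hat{\mathbf{s}}\, \delta\left(\hat{\mathbf{r}}\cdot\hat{\mathbf{s}} - \mathrm{cos}\theta\right) \sum\limits_{j=1}^{N_{a}} \sum\limits_{k \ne j}^{N_{a}} \rho_{ij}\left(r\hat{\mathbf{r}}\right) \rho_{ik}\left(s\hat{\mathbf{s}}\right),
</math>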

where <math>\theta</math> denotes the angle between two vectors <math>\mathbf{r}_{ij}</math> and <math>\mathbf{r}_{ik}</math> [see Fig. 2 (b)]. The important difference of the function <math>\rho_{i}^{(3)}</math> compared to the angular distribution function (also called power spectrum within the Gaussian Approximation Potential) used in reference [5] is that no self-interaction is included, where <math>j</math> and <math>k</math> have the same distance from <math>i</math> and the angle between the two is zero. It can be shown [3] that the self-interaction component is equivalent to the two-body descriptors. This means that our angular descriptor is a pure angular descriptor, containing no two-body components, and it cannot be expressed as linear combinations of the power spectrum. The advantage of this descriptor is that it enables us to separately control the effects of two- and three-body descriptors.

Basis set expansion and descriptors

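The atomic probability density can also be expanded in terms of basis functions

<math>
\rho_{i}\left(\mathbf{r}\right) = \sum\limits_{n} \sum\limits_{l} \sum\limits_{m=-l}^{l} c_{nlm}^{i} \chi_{nl}\left(r\right) Y_{lm}\left(\hat{\mathbf{r}}\right),
</math>

where <math>c_{nlm}^{i}</math>, <math>\chi_{nl}</math> and <math>Y_{lm}</math> denote expansion coefficients, radial basis functions and spherical harmonics, respectively. The indices <math>n</math>, <math>l</math> and <math>m</math> denote radial numbers, angular and magnetic quantum numbers, respectively.

By using the above equation the radial descriptor and angular descriptor can be written as

<math>
\rho_{i}^{(2)}\left(r\right) = \frac{1}{\sqrt{4\pi}} \sum\limits_{n} c_{n00}^{i} \chi_{n0}\left(r\right)
</math>

and

<math>
\rho_{i}^{(3)}\left(r,s,\theta\right) = \sum\limits_{l} \sum\limits_{n} \sum\limits_{\nu} \sqrt{\frac{2l+1}{2}}\, p_{n\nu l}^{i}\, \chi_{nl}\left(r\right) \chi_{\nu l}\left(s\right) P_{l}\left(\mathrm{cos}\theta\right),
</math>

where <math>\chi_{nl}</math> and <math>P_{l}</math> represent normalized spherical Bessel functions and Legendre polynomials of order <math>l</math>, respectively.

The expansion coefficients for the angular descriptor can be converted to

<math>
p_{n\nu l}^{i}=\sqrt{\frac{8\pi^{2}}{2l+1}} \sum\limits_{m=-l}^{l} \left[ c_{nlm}^{i} c_{\nu lm}^{i*} - \sum\limits_{j} c_{nlm}^{ij} c_{\nu lm}^{ij*} \right],
</math>

where <math>c_{nlm}^{ij}</math> denotes expansion coefficients of the distribution <math>\rho_{ij}\left(\mathbf{r}\right)</math> with respect to <math>\chi_{nl}</math> and <math>Y_{lm}</math>

<math>
c_{nlm}^{ij} = \int \chi_{nl}\left(r\right) Y_{lm}^{*}\left(\hat{\mathbf{r}}\right) \rho_{ij}\left(\mathbf{r}\right) d\mathbf{r}
</math>

and

<math>
c_{nlm}^{i} = \sum\limits_{j} c_{nlm}^{ij}.
</math>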

Angular filtering

In many cases <math>\chi_{nl}</math> is multiplied with an angular filtering function[6] <math>\eta</math> (ML_IAFILT2), which can noticeably reduce the necessary basis set size without losing accuracy in the calculations

where <math>a_{\mathrm{FILT}}</math> (ML_AFILT2) is a parameter controlling the extent of the filtering. A larger value for the parameter should provide more filtering.

Reduced descriptors

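A descriptor that reduces the number of descriptors with respect to the number of elements[7] is written as

<math>
p_{n\nu l}^{iJ}=\sqrt{\frac{8\pi^{2}}{2l+1}} \sum\limits_{m=-l}^{l} c_{nlm}^{iJ} \sum\limits_{J'}c_{\nu lm}^{iJ'}.
</math>

For more details on the effects of this descriptor, see ML_DESC_TYPE.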

Potential energy fitting

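It is convenient to express the local potential energy <math>U_{i}</math> of atom <math>i</math> in structure <math>\alpha</math> in terms of linear coefficients <math>w_{i_{\mathrm{B}}}</math> and a kernel <math>K\left(\mathbf{X}_{i},\mathbf{X}_{i_{\mathrm{B}}}\right)</math> as follows

<math>
U_{i} = \sum\limits_{i_{\mathrm{B}}=1}^{N_{\mathrm{B}}} w_{i_{\mathrm{B}}} K\left(\mathbf{X}_{i},\mathbf{X}_{i_{\mathrm{B}}}\right),
</math>

where <math>N_{\mathrm{B}}</math> is the basis set size. The kernel <math>K\left(\mathbf{X}_{i},\mathbf{X}_{i_{\mathrm{B}}}\right)</math> measures the similarity between a local configuration from the training set <math>\rho_{i}(\mathbf{r})</math> and basis set <math>\rho_{i_{\mathrm{B}}}(\mathbf{r})</math>. Using the radial and angular descriptors it is written as

<math>
K\left(\mathbf{X}_{i},\mathbf{X}_{i_{\mathrm{B}}}\right) = \beta^{(2)} \left(\mathbf{X}_{i}^{(2)}\cdot\mathbf{X}_{i_{\mathrm{B}}}^{(2)}\right) + \beta^{(3)} \left(\hat{\mathbf{X}}_{i}^{(3)}\cdot\hat{\mathbf{X}}_{i_{\mathrm{B}}}^{(3)}\right)^{\zeta^{(3)}}.
</math>

Here the vectors <math>\mathbf{X}_{i}^{(2)}</math> and <math>\mathbf{X}_{i}^{(3)}</math> contain all coefficients <math>c_{n00}^{i}</math> and <math>p_{n\nu l}^{i}</math>, respectively. The notation <math>\hat{\mathbf{X}}_{i}^{(3)}</math> indicates that it is a normalized vector of <math>\mathbf{X}_{i}^{(3)}</math>. The parameters <math>\beta^{(2)}</math> and <math>\beta^{(3)}</math> (ML_W1) control the weighting of the radial and angular terms, respectively. The parameter <math>\zeta^{(3)}</math> (ML_NHYP) controls the sharpness of the kernel and the order of the many-body interactions.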

Matrix vector form of linear equations

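Similarly to the energy, the forces and the stress tensor are also described as linear functions of the coefficients <math>w_{i_{\mathrm{B}}}</math>. All three are fitted simultaneously, which leads to the following matrix-vector form

<math>
\mathbf{Y} = \mathbf{\Phi} \mathbf{w},
</math>

where <math>\mathbf{Y}</math> is a super vector consisting of the sub vectors <math>\mathbf{y}^{\alpha}</math>. Here each <math>\mathbf{y}^{\alpha}</math> contains the first-principles energy per atom, forces and stress tensor for each structure <math>\alpha</math>. <math>N_{\mathrm{st}}</math> denotes the total number of structures. For structures with <math>N_{a}</math> atoms each, the size of <math>\mathbf{Y}</math> is <math>N_{\mathrm{st}}\times(1+3N_{a}+6)</math>.

The matrix <math>\mathbf{\Phi}</math> is also called the design matrix[8]. The rows of this matrix are blocked for each structure <math>\alpha</math>, where the first line of each block consists of the kernel used to calculate the energy. The subsequent <math>3N_{a}</math> lines consist of the derivatives of the kernel with respect to the atomic coordinates used to calculate the forces. The final 6 lines within each structure consist of the derivatives of the kernel with respect to the unit cell coordinates used to calculate the stress tensor components. The overall size of <math>\mathbf{\Phi}</math> is therefore <math>N_{\mathrm{st}}(1+3N_{a}+6)\times N_{\mathrm{B}}</math>.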

Bayesian linear regression

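Ultimately, for error prediction we want the probability of observing a new structure <math>\mathbf{y}</math> on the basis of the training set <math>\mathbf{Y}</math>, which is denoted as <math>p \left( \mathbf{y} | \mathbf{Y} \right)</math>. For this we first need the distribution <math>p \left( \mathbf{w} | \mathbf{Y} \right)</math> of the linear fitting coefficients <math>\mathbf{w}</math> in the reproduction of the training data, from which <math>p \left( \mathbf{y} | \mathbf{Y} \right)</math> follows as explained below.

First we obtain <math>p \left( \mathbf{w} | \mathbf{Y} \right)</math> from Bayes' theorem

<math>
p \left( \mathbf{w} | \mathbf{Y} \right) = \frac{p \left( \mathbf{Y} | \mathbf{w} \right) p \left( \mathbf{w} \right)}{p \left( \mathbf{Y} \right)},
</math>

where we assume multivariate Gaussian distributions <math>\mathcal{N}</math> for the likelihood function

<math>
p \left( \mathbf{Y} | \mathbf{w} \right) = \mathcal{N} \left( \mathbf{Y} | \mathbf{\Phi}\mathbf{w}, \sigma_{\mathrm{v}}^{2} \mathbf{I} \right)
</math>

and the (conjugate) prior

<math>
p \left( \mathbf{w} \right) = \mathcal{N} \left( \mathbf{w} | \mathbf{0}, \sigma_{\mathrm{w}}^{2} \mathbf{I} \right).
</math>

The parameters <math>\sigma_{\mathrm{v}}^{2}</math> and <math>\sigma_{\mathrm{w}}^{2}</math> need to be optimized to balance the accuracy and robustness of the force field (see below). The normalization is obtained by

<math>
p \left( \mathbf{Y} \right) = \int p \left( \mathbf{Y} | \mathbf{w} \right) p \left( \mathbf{w} \right) d\mathbf{w}.
</math>

Using the equations from above and the completing square method[8], <math>p \left( \mathbf{w} | \mathbf{Y} \right)</math> is obtained as follows

<math>
p \left( \mathbf{w} | \mathbf{Y} \right) = \mathcal{N} \left(\mathbf{w}| \mathbf{\bar w},\mathbf{\Sigma} \right).
</math>

Since the prior and the likelihood function are described by multivariate Gaussian distributions, the posterior is a multivariate Gaussian written as

<math>
\mathcal{N} \left(\mathbf{w}| \mathbf{\bar w},\mathbf{\Sigma} \right) = \frac{1}{\sqrt{ \left( 2 \pi \right)^{N_{\mathrm{B}}} \mathrm{det}\mathbf{\Sigma} }} \mathrm{exp} \left[ -\frac{ \left( \mathbf{w} - \mathbf{\bar w} \right)^{\mathrm{T}} \mathbf{\Sigma}^{-1} \left( \mathbf{w} - \mathbf{ \bar w} \right)}{2} \right]
</math>

where we use the following definitions for the center of the Gaussian distribution <math>\mathbf{\bar w}</math> and the covariance matrix <math>\mathbf{\Sigma}</math>

<math>
\mathbf{\bar w} = s_{\mathrm{v}} \mathbf{\Sigma} \mathbf{\Phi}^{\mathrm{T}} \mathbf{Y},
</math>

<math>
\mathbf{\Sigma}^{-1} =s_{\mathrm{w}} \mathbf{I} + s_{\mathrm{v}} \mathbf{\Phi}^{\mathrm{T}}\mathbf{\Phi}.
</math>

Here we have used the following definitions

<math>
s_{\mathrm{v}} = \frac{1}{\sigma_{\mathrm{v}}^{2}}
</math>

and

<math>
s_{\mathrm{w}} = \frac{1}{\sigma_{\mathrm{w}}^{2}}.
</math>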

Regression

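We want to obtain the weights <math>\mathbf{\bar w}</math> for the linear equations

<math>
\mathbf{Y} = \mathbf{\Phi} \mathbf{\bar w}.
</math>

Solution via Inversion

By directly using the covariance matrix <math>\mathbf{\Sigma}</math>, the equation from above

<math>
\mathbf{\bar w} = s_{\mathrm{v}} \mathbf{\Sigma} \mathbf{\Phi}^{\mathrm{T}} \mathbf{Y}
</math>

can be solved straightforwardly. However, only <math>\mathbf{\Sigma}^{-1}</math> is directly accessible and <math>\mathbf{\Sigma}</math> must be obtained from <math>\mathbf{\Sigma}^{-1}</math> via inversion. This inversion is numerically unstable and leads to lower accuracy. Hence we don't use this method.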

Solution via LU factorization

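Here we directly use the inverse of the covariance matrix <math>\mathbf{\Sigma}^{-1}</math> by solving the following equation for the weights <math>\mathbf{\bar w}</math>

<math>
\mathbf{\Sigma}^{-1} \mathbf{\bar w} = s_{\mathrm{v}} \mathbf{\Phi}^{\mathrm{T}} \mathbf{Y}.
</math>

For that, <math>\mathbf{\Sigma}^{-1}</math> is decomposed into its LU-factorized components <math>\mathbf{P}\mathbf{L}\mathbf{U}</math>. After that, the <math>\mathbf{L}\mathbf{U}</math> factors are used to solve the previous linear equation for the weights <math>\mathbf{\bar w}</math>. This method is noticeably more accurate than the method via inversion of <math>\mathbf{\Sigma}^{-1}</math> while being on the same order of magnitude in terms of computational cost. Hence it is the method used in on-the-fly learning.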
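As an illustration of the LU route, the sketch below builds the inverse covariance matrix (s_w times the identity plus s_v times the Gram matrix of the design matrix) and solves the linear system for the weights without ever forming the covariance matrix itself. It relies on `numpy.linalg.solve`, which uses an LU factorization with partial pivoting internally. The function name `solve_weights_lu` and its arguments are hypothetical, and this is not the production implementation.

```python
import numpy as np


def solve_weights_lu(phi, y, s_v, s_w):
    """Solve Sigma^{-1} w = s_v Phi^T Y for w via LU factorization."""
    n_b = phi.shape[1]
    sigma_inv = s_w * np.eye(n_b) + s_v * phi.T @ phi   # inverse covariance matrix
    rhs = s_v * phi.T @ y                               # right-hand side
    return np.linalg.solve(sigma_inv, rhs)              # LU with partial pivoting


rng = np.random.default_rng(0)
phi_demo = rng.normal(size=(50, 6))   # toy design matrix (assumed data)
y_demo = rng.normal(size=50)          # toy reference data (assumed data)
w_demo = solve_weights_lu(phi_demo, y_demo, s_v=10.0, s_w=0.1)
```

For a well-conditioned toy problem like this one the result agrees with the explicit-inverse route; the difference between the two only shows up when the inverse covariance matrix is badly conditioned.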

Solution via regularized SVD

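The regression problem can also be solved by using the singular value decomposition to factorize <math>\mathbf{\Phi}</math> as

<math>
\mathbf{\Phi}=\mathbf{U}\mathbf{\Lambda}\mathbf{V}^{T}.
</math>

To add the regularization, the diagonal matrix <math>\mathbf{\Lambda}</math> containing the singular values is rewritten as

<math>
\tilde{\mathbf{\Lambda}}=\mathbf{\Lambda}+\frac{s_{\mathrm{w}}}{s_{\mathrm{v}}}\mathbf{\Lambda}^{-1}.
</math>

By using the orthogonality of the left and right singular-vector matrices, <math>\mathbf{U}^{T}\mathbf{U}=\mathbf{I}_{n}</math> and <math>\mathbf{V}\mathbf{V}^{T}=\mathbf{I}_{n}</math>, the regression problem has the following solution

<math>
\tilde{\mathbf{w}} = \mathbf{V}\tilde{\mathbf{\Lambda}}^{-1}\mathbf{U}^{T}\mathbf{Y}.
</math>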
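The regularized SVD solution can be checked numerically. In the sketch below each singular value λ is replaced by λ + (s_w/s_v)/λ before inversion, which is algebraically the same as solving the regularized normal equations (Φ^T Φ + (s_w/s_v) I) w = Φ^T Y. The function name `solve_weights_svd` is hypothetical, and the code assumes that all singular values are nonzero.

```python
import numpy as np


def solve_weights_svd(phi, y, s_v, s_w):
    """Regularized SVD solution w = V Lambda_tilde^{-1} U^T Y."""
    u, lam, vt = np.linalg.svd(phi, full_matrices=False)  # phi = U diag(lam) V^T
    lam_tilde = lam + (s_w / s_v) / lam                   # regularized singular values
    return vt.T @ ((u.T @ y) / lam_tilde)


rng = np.random.default_rng(1)
phi_svd = rng.normal(size=(40, 5))    # toy design matrix (assumed data)
y_svd = rng.normal(size=40)           # toy reference data (assumed data)
w_svd = solve_weights_svd(phi_svd, y_svd, s_v=4.0, s_w=0.5)
```

Because 1/(λ + α/λ) = λ/(λ² + α) with α = s_w/s_v, small singular values are damped instead of amplified, which is what makes the solution robust against near-linear dependencies in the design matrix.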

Evidence approximation

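Finally, to get the best results and to prevent overfitting, the parameters <math>\sigma_{\mathrm{v}}^{2}</math> and <math>\sigma_{\mathrm{w}}^{2}</math> have to be optimized. To achieve this, we use the evidence approximation[9][10][11] (also called empirical Bayes, type 2 maximum likelihood or generalized maximum likelihood), which maximizes the evidence function (also called model evidence) defined as

<math>
p\left(\mathbf{Y}|\sigma_{\mathrm{v}}^{2},\sigma_{\mathrm{w}}^{2}\right) = \left(\frac{1}{\sqrt{2\pi\sigma_{\mathrm{v}}^{2}}}\right)^{M} \left(\frac{1}{\sqrt{2\pi\sigma_{\mathrm{w}}^{2}}}\right)^{N_{\mathrm{B}}} \int \mathrm{exp} \left[ -E\left( \mathbf{w} \right) \right] d\mathbf{w},
</math>

<math>
E\left( \mathbf{w} \right) = \frac{s_{\mathrm{v}}}{2} || \mathbf{\Phi}\mathbf{w}-\mathbf{Y}||^{2} + \frac{s_{\mathrm{w}}}{ 2}||\mathbf{w}||^{2},
</math>

where <math>M</math> is the number of rows of <math>\mathbf{\Phi}</math>, i.e. the length of <math>\mathbf{Y}</math>.

The function <math>p\left(\mathbf{Y}|\sigma_{\mathrm{v}}^{2},\sigma_{\mathrm{w}}^{2}\right)</math> corresponds to the probability that the regression model with parameters <math>\sigma_{\mathrm{v}}^{2}</math> and <math>\sigma_{\mathrm{w}}^{2}</math> provides the reference data <math>\mathbf{Y}</math>. Hence the best fit is obtained by maximizing this probability. The maximization is carried out by simultaneously solving the following equations

<math>
s_{\mathrm{w}}=\frac{\gamma}{|\mathbf{\bar{w}}|^{2}},
</math>

<math>
s_{\mathrm{v}}=\frac{M-\gamma}{|\mathbf{Y}-\mathbf{\Phi}\mathbf{\bar{w}}|^{2}},
</math>

<math>
\gamma=\sum\limits_{k=1}^{N_{\mathrm{B}}} \frac{\lambda_{k}}{\lambda_{k}+s_{\mathrm{w}}}
</math>

where <math>\lambda_{k}</math> are the eigenvalues of the matrix <math>s_{\mathrm{v}}\mathbf{\Phi}^{\mathrm{T}}\mathbf{\Phi}</math>.

The evidence approximation can be done for any of the above-described regression methods, but we combine it only with the solutions from LU factorization, since solutions via SVD are computationally too expensive to be carried out multiple times.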
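The coupled equations for s_w, s_v and γ are usually solved by fixed-point iteration: solve for the weights at the current s_v and s_w, recompute γ, update both parameters, and repeat. The sketch below is one such iteration under assumed starting values and an assumed convergence criterion; the function name `evidence_approximation`, the iteration limit and the tolerance are hypothetical and not taken from the text.

```python
import numpy as np


def evidence_approximation(phi, y, s_v=1.0, s_w=1.0, n_iter=200, tol=1e-10):
    """Fixed-point iteration for s_v and s_w; returns (w, s_v, s_w, gamma)."""
    m, n_b = phi.shape
    gram = phi.T @ phi
    eig = np.linalg.eigvalsh(gram)              # eigenvalues of Phi^T Phi
    gamma = 0.0
    for _ in range(n_iter):
        w = np.linalg.solve(s_w * np.eye(n_b) + s_v * gram, s_v * phi.T @ y)
        lam = s_v * eig                         # eigenvalues of s_v Phi^T Phi
        gamma = np.sum(lam / (lam + s_w))
        s_w_new = gamma / (w @ w)
        s_v_new = (m - gamma) / np.sum((y - phi @ w) ** 2)
        done = (abs(s_w_new - s_w) <= tol * s_w and abs(s_v_new - s_v) <= tol * s_v)
        s_v, s_w = s_v_new, s_w_new
        if done:
            break
    # final weights consistent with the converged parameters
    w = np.linalg.solve(s_w * np.eye(n_b) + s_v * gram, s_v * phi.T @ y)
    return w, s_v, s_w, gamma


rng = np.random.default_rng(0)
phi_ev = rng.normal(size=(200, 5))                      # toy design matrix (assumed)
w_true = np.array([1.0, -2.0, 0.5, 3.0, -1.5])          # toy weights (assumed)
y_ev = phi_ev @ w_true + 0.1 * rng.normal(size=200)     # noise sigma_v = 0.1
w_ev, s_v_ev, s_w_ev, gamma_ev = evidence_approximation(phi_ev, y_ev)
```

On this synthetic data the iteration should recover a noise precision s_v of roughly 1/0.1² = 100, since the data were generated with that noise level. Each iteration needs only one linear solve, which is why the scheme pairs naturally with the LU solution.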

Error estimation

Error estimates from Bayesian linear regression

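By using the relation

<math>
p \left( \mathbf{y} | \mathbf{Y} \right) = \int p \left( \mathbf{y} | \mathbf{w} \right) p \left( \mathbf{w} | \mathbf{Y} \right) d\mathbf{w}
</math>

and the completing square method[8], the distribution <math>p \left( \mathbf{y} | \mathbf{Y} \right)</math> is written as

<math>
p \left( \mathbf{y} | \mathbf{Y} \right) = \mathcal{N} \left( \mathbf{\phi}\mathbf{\bar w}, \mathbf{\sigma} \right),
</math>

<math>
\mathbf{\sigma}=\frac{1}{s_{\mathrm{v}}}\mathbf{I}+\mathbf{\phi}^{\mathrm{T}}\mathbf{\Sigma}\mathbf{\phi}.
</math>

Here <math>\mathbf{\phi}</math> is similar to <math>\mathbf{\Phi}</math> but only for the new structure <math>\mathbf{y}</math>.

The mean vector <math>\mathbf{\phi}\mathbf{\bar w}</math> contains the results of the predictions on the dimensionless energy per atom, forces, and stress tensor. The diagonal elements of <math>\mathbf{\sigma}</math>, which correspond to the variances of the predicted results, are used as the uncertainty in the prediction.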
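The predictive mean and the diagonal of the predictive covariance can be computed without forming the covariance matrix of the weights explicitly. The sketch below assumes a layout in which each row of the new-structure matrix corresponds to one predicted quantity, so the quadratic form is evaluated row by row; that layout, the function name `predict_with_uncertainty` and the toy data are all assumptions made for illustration.

```python
import numpy as np


def predict_with_uncertainty(phi_new, phi_train, y_train, s_v, s_w):
    """Return predictive mean and variance for each row of phi_new."""
    n_b = phi_train.shape[1]
    sigma_inv = s_w * np.eye(n_b) + s_v * phi_train.T @ phi_train
    w_bar = np.linalg.solve(sigma_inv, s_v * phi_train.T @ y_train)
    mean = phi_new @ w_bar
    # Sigma phi^T obtained by a linear solve instead of inverting sigma_inv
    sigma_phi_t = np.linalg.solve(sigma_inv, phi_new.T)
    variance = 1.0 / s_v + np.einsum("ij,ji->i", phi_new, sigma_phi_t)
    return mean, variance


rng = np.random.default_rng(2)
phi_tr = rng.normal(size=(30, 4))                    # toy training design matrix
y_tr = rng.normal(size=30)                           # toy training data
phi_nw = np.array([[0.0, 0.0, 0.0, 0.0],             # row with no kernel response
                   [5.0, 5.0, 5.0, 5.0]])            # row far from the training data
mean_nw, var_nw = predict_with_uncertainty(phi_nw, phi_tr, y_tr, s_v=25.0, s_w=1.0)
```

The first row returns exactly the noise floor 1/s_v, while the second row returns a larger variance because the weight uncertainty contributes through the quadratic form. In on-the-fly learning this variance is the quantity compared against the force threshold.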

Spilling factor

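The spilling factor[2][12] <math>s_{i}</math> is a measure of the overlap (or similarity) of a given structural environment on an atom <math> \mathbf{X}_{i}</math> with the local reference configurations <math> \mathbf{X}_{i_{\mathrm{B}}} </math> written as

<math>
s_{i}= 1 - \frac{ \sum\limits_{i_{\mathrm{B}}=1}^{N_{\mathrm{B}}} \sum\limits_{i'_{\mathrm{B}}=1}^{N_{\mathrm{B}}} K(\mathbf{X}_{i},\mathbf{X}_{i_{\mathrm{B}}}) K^{-1}(\mathbf{X}_{i_{\mathrm{B}}}, \mathbf{X}_{i'_{\mathrm{B}}}) K(\mathbf{X}_{i'_{\mathrm{B}}},\mathbf{X}_{i}) } { K(\mathbf{X}_{i},\mathbf{X}_{i}) }.
</math>

If <math>\mathbf{X}_{i}</math> is fully overlapping with any of the local reference configurations, then the second term on the right-hand side of the above equation becomes 1 and <math> s_{i} = 0 </math>. If <math>\mathbf{X}_{i}</math> has no similarities with any of the local reference configurations, the second term on the right-hand side of the above equation becomes 0 and <math> s_{i} = 1 </math>.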
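The two limiting cases can be reproduced with a toy kernel. The sketch below uses a normalized dot-product kernel raised to a power as a stand-in for the similarity measure; this kernel, the function names `kernel` and `spilling_factor`, and the three-component descriptor vectors are assumptions chosen for illustration only.

```python
import numpy as np


def kernel(x, y, zeta=4):
    """Toy similarity: normalized dot product raised to the power zeta."""
    x_hat = x / np.linalg.norm(x)
    y_hat = y / np.linalg.norm(y)
    return float(x_hat @ y_hat) ** zeta


def spilling_factor(x, references, zeta=4):
    """s = 1 - k^T K^{-1} k / K(x, x) for descriptor x and reference descriptors."""
    k_vec = np.array([kernel(x, ref, zeta) for ref in references])
    k_mat = np.array([[kernel(a, b, zeta) for b in references] for a in references])
    projected = k_vec @ np.linalg.solve(k_mat, k_vec)   # avoids an explicit inverse
    return 1.0 - projected / kernel(x, x, zeta)


refs = [np.array([1.0, 0.0, 0.0]), np.array([1.0, 1.0, 0.0])]
s_known = spilling_factor(refs[0], refs)                      # identical to a reference
s_unknown = spilling_factor(np.array([0.0, 0.0, 1.0]), refs)  # orthogonal to all references
```

An environment that coincides with a reference gives a spilling factor of 0, and one with no overlap with any reference gives 1, matching the limits described above.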

Sparsification

Within the machine learning force field methods, the sparsification of local reference configurations and of the angular descriptors is supported. The sparsification of local reference configurations is used by default and its extent is mainly controlled by ML_EPS_LOW. This procedure is important to avoid overcompleteness and to dampen the acquisition of new configurations in special cases. The sparsification of angular descriptors is not used by default and should be used very cautiously and only if necessary. The usage of this feature is described in ML_LSPARSDES.

Sparsification of local reference configurations

We start by defining the similarity kernel (or Gram matrix) for the local configurations with each other

The CUR algorithm starts out from the diagonalization of this matrix

where is the matrix of the eigenvectors

In contrast to the original CUR algorithm[13] that was developed to efficiently select a few significant columns of the matrix , we search for (few) insignificant local configurations and remove them. We dispose of the columns of that are correlated with the eigenvectors with the smallest eigenvalues . The correlation is measured by the statistical leverage scoring measured for each column of as

where (see also ML_EPS_LOW) is the threshold for the lowest eigenvalues. One can prove (using the orthogonality of the eigenvectors and their completeness relation) that this is equivalent to the usual CUR leverage scoring algorithm, i.e. removing the least significant columns will result in those columns that are most strongly "correlated" to the largest eigenvalues.

Sparsification of angular descriptors

The sparsification of the angular descriptors is done in a similar manner as for the local reference configurations. We start by defining the square matrix

Here denotes the number of angular descriptors given by

In this equation the symmetry of the descriptors is already taken into account. The matrix is constructed from the vectors of the angular descriptors . The matrix has dimension and the matrix product is done over the elements of the local configurations.

In analogy to the local configurations the th element of the matrix is written as

In contrast to the sparsification of the local configuration and more in line with the original CUR method, the columns of matrix are kept when they are strongly correlated to the (see also ML_NRANK_SPARSDES) eigenvectors which have the largest eigenvalues . The correlation of the eigenvalues is then measured via a leverage scoring

From the leverage scorings, the ones with the highest values are selected until the ratio of the selected descriptors to the total number of descriptors becomes a previously selected value (see also ML_RDES_SPARSDES).

References

1. R. Jinnouchi, J. Lahnsteiner, F. Karsai, G. Kresse, and M. Bokdam, Phys. Rev. Lett. 122, 225701 (2019).

2. R. Jinnouchi, F. Karsai, and G. Kresse, Phys. Rev. B 100, 014105 (2019).

3. R. Jinnouchi, F. Karsai, C. Verdi, R. Asahi, and G. Kresse, J. Chem. Phys. 152, 234102 (2020).

4. A. P. Bartók, R. Kondor, and G. Csányi, Phys. Rev. B 87, 184115 (2013).

5. A. P. Bartók, M. C. Payne, R. Kondor, and G. Csányi, Phys. Rev. Lett. 104, 136403 (2010).

6. J. P. Boyd, Chebyshev and Fourier Spectral Methods (Dover Publications, New York, 2000).

7. J. P. Darby, J. R. Kermode, and G. Csányi, Compressing local atomic neighbourhood descriptors, npj Comput. Mater. 8, 166 (2022).

8. C. M. Bishop, Pattern Recognition and Machine Learning (Springer, New York, 2006).

9. S. F. Gull and J. Skilling, Maximum Entropy Bayesian Methods, Fundam. Theor. Phys., 28th ed. (Springer, Dordrecht, 1989).

10. D. J. C. MacKay, Neural Computation 4, 415 (1992).

11. R. Jinnouchi and R. Asahi, J. Phys. Chem. Lett. 8, 4279 (2017).

12. K. Miwa and H. Ohno, Phys. Rev. B 94, 184109 (2016).

13. M. W. Mahoney and P. Drineas, Proc. Natl. Acad. Sci. USA 106, 697 (2009).